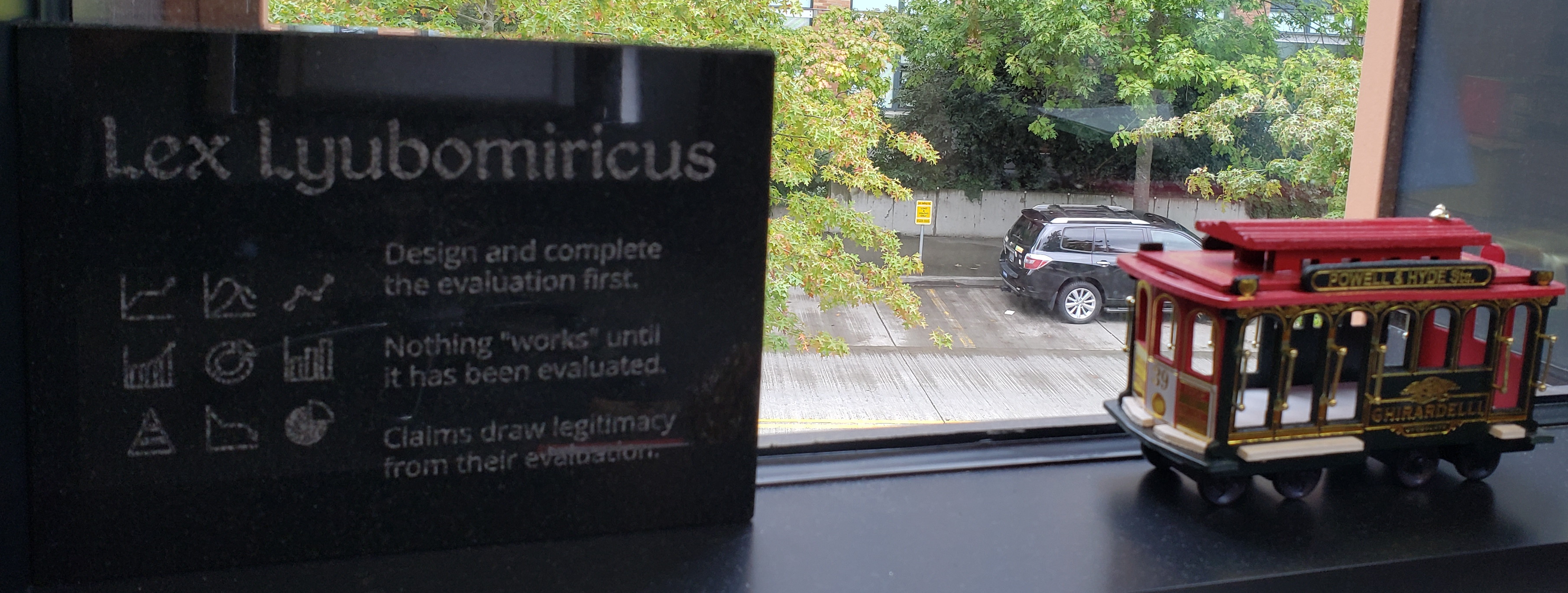

Lex Lyubomiricus

Over the course of several research projects, I have come to develop a very simple doctrine for ensuring that our work remains on track and that we will be able to bring our results to publication. This really all amounts to a single principle, which my adviser Prof. Zach Tatlock jokingly dubbed "Lyubomirsky's Law." So as to be maximally pretentious, I have called it lex Lyubomiricus, and it is this:

The prize, in this case, is being able to publish a paper. In my view, the greatest obstacle is usually having convincing evaluation, which consists of an empirical demonstration of one's contributions that should persuade a skeptic that these contributions are, in fact, valuable. I am of the opinion that designing and performing experiments is generally more difficult and sensitive than the other tasks needed to bring a research paper to publication, such as describing the contributions or assembling the related work, though these are also essential.

My reasoning for prioritizing the evaluation is simply that without the evaluation, there cannot be a defensible paper, whereas the written sections can at least be filled in perfunctorily and can still be revised if the evaluation is convincing enough. (Of course, a badly written paper may be rejected precisely for being badly written, but that is a matter of degree.) Perhaps this is an entirely psychological barrier, but I am convinced that once the empirical foundations for a work have been established, the writing to support it can be done much more quickly; the evaluation is the crucial threshold to clear.

The rest of the "doctrine" of lex Lyubomiricus consists of corollaries of the above-listed principle (in no particular order):

- Finish the evaluation first.

- Begin by considering what would be the least amount of evaluation that you think you could reasonably submit for peer review. Do not worry about anything else until you have that.

- The "least" amount of evaluation is the simplest experiment that would tell you if your basic claims are wrong. If your initial hypothesis is wrong, it would be best to know that as soon as possible.

- Prioritize what is likeliest to give demonstrable results the soonest. If you fall behind schedule and the deadline is approaching, you can consider whether the results you already have could justify a submission.

- Pick your target deadline based on how long it will take to perform the evaluation.

- Ensure you can describe how you obtained your results and what they mean. This will form the paper's evaluation section.

- When writing the paper, ensure all claims draw legitimacy from the evaluation.

My experience is in doing work with an empirical component; the considerations are likely to be different for papers whose contributions are theoretical. I make no claims of originality, but I find I repeat myself often enough that it's worth writing it up. I welcome any feedback.

Panchekha's Principle

My former colleague Pavel Panchekha has promoted a related principle for setting up systems evaluations, which I include here with his permission. Because research systems are constantly undergoing changes and may need to be redesigned as a project goes on, they are very prone to accumulating bugs. Suddenly finding that one's system breaks right before a paper deadline is always an emotionally fraught experience and can undermine a submission at the last minute. To guard against such a possibility, Prof. Panchekha proposes the following view:

Namely, one should not consider the system evaluation to "work" until there is a "nightly" for it (a set of tests that runs automatically every night). Prof. Panchekha employed nightlies for his Herbie publications, and nightlies have been helpful in my own experience with TVM Relay and DTR. Having a nightly run ensures that the most important code for the paper is constantly being exercised and alerts the group quickly to any serious errors; additionally, maintaining the infrastructure for the nightly has many benefits in terms of encouraging good software engineering practices and documenting important information for reproducibility. (For example, I have definitely found that having infrastructure like the Relay dashboard has helped us quickly add new experiments when necessary.)

Of course, it may not be possible or feasible to run one's entire project evaluation every night (there may be physical components involved, the necessary computing resources may cost too much, or the full evaluation may take days to run), but one should take Panchekha's Principle as a guideline: software components of the research should be exercised frequently, ideally with automatic tests, to keep last-minute surprise bugs to a minimum. Additionally, with the increasing prevalence of artifact review, having the infrastructure for nightly runs helps tremendously with preparing artifacts; it is good to make that effort up front.
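To make the idea of a nightly more concrete, here is a minimal sketch of what a bare-bones nightly harness might look like. It is not the infrastructure used for Herbie, Relay, or DTR; the experiment names, the commands, and the `nightly_report.json` report format are all hypothetical placeholders. The script runs each experiment as a subprocess, records whether it exited cleanly and how long it took, writes a JSON report, and exits with a nonzero status on failure so that whatever schedules it (for example, cron or a CI system's scheduled jobs) can alert the group.

```python
import json
import subprocess
import sys
import time
from dataclasses import asdict, dataclass


@dataclass
class ExperimentResult:
    name: str
    passed: bool
    returncode: int
    seconds: float
    log_tail: str


def run_experiment(name: str, command: list[str], timeout_s: float = 3600.0) -> ExperimentResult:
    """Run one experiment command and record whether it exited cleanly."""
    start = time.monotonic()
    try:
        proc = subprocess.run(command, capture_output=True, text=True, timeout=timeout_s)
        returncode = proc.returncode
        output = proc.stdout + proc.stderr
    except subprocess.TimeoutExpired:
        # A hung experiment counts as a failure rather than blocking the whole nightly.
        returncode = -1
        output = f"timed out after {timeout_s} s"
    elapsed = time.monotonic() - start
    return ExperimentResult(
        name=name,
        passed=(returncode == 0),
        returncode=returncode,
        seconds=round(elapsed, 2),
        log_tail=output[-2000:],  # keep only the end of the log for the report
    )


def run_nightly(experiments: dict[str, list[str]], report_path: str) -> bool:
    """Run every experiment, write a JSON report, and return True only if all passed."""
    results = [run_experiment(name, cmd) for name, cmd in experiments.items()]
    report = {
        "timestamp": time.strftime("%Y-%m-%dT%H:%M:%S"),
        "results": [asdict(r) for r in results],
    }
    with open(report_path, "w") as f:
        json.dump(report, f, indent=2)
    return all(r.passed for r in results)


if __name__ == "__main__":
    # Hypothetical experiment list; a real project would point these at its evaluation scripts.
    EXPERIMENTS = {
        "smoke_test": [sys.executable, "-c", "print('ok')"],
    }
    ok = run_nightly(EXPERIMENTS, "nightly_report.json")
    print("nightly:", "PASS" if ok else "FAIL")
    if not ok:
        sys.exit(1)  # a nonzero exit lets the scheduler flag the failure
```

Even a harness this small forces the evaluation to be runnable end to end without manual steps, which is most of what makes it useful later for adding experiments or packaging an artifact.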

Why write it up?

Many of these principles may seem obvious, following directly from a schoolbook description of the scientific method. I have found, though, that in computer systems research in particular, the distinction between designing and implementing the system itself and evaluating it is often blurred. One can become consumed in implementing a system, adding features, and even performing some sanity checks, but the system is unlikely to impress peer reviewers unless it can be adequately evaluated against its core claims, particularly in comparison to alternatives. Hence, we may consider another formulation of lex Lyubomiricus:

These laws have been carved in stone courtesy of my adviser. I have always joked that our research should be archived in stone, the best of all archival formats, and I am enormously grateful to Zach for actually doing it in the case of lex Lyubomiricus.